The symbiosis of innovation and efficiency in supercomputing

Energy efficiency, or the management of heat generation and dissipation, has been one of the main drivers for developments and innovation in both consumer computing and supercomputing technologies. Jean-Christophe Desplat, Director of the Irish Centre for High-End Computing (ICHEC), writes.

In the world of supercomputing, or High Performance Computing (HPC), the primary goal is to make the best use of powerful computer systems to carry out large amounts of number crunching for diverse research and industrial purposes. To put this in perspective, the computing power of a supercomputer is roughly equivalent to that of thousands or millions of average desktop computers put together, all connected by a very fast network and working in unison. Hence, running a supercomputer requires large amounts of electrical power. But almost all of this power is turned into heat, which in turn requires additional power to keep the components within their operating temperatures.

Most of the heat generated by a modern computer originates from its integrated circuits (commonly known as ‘processors’ or ‘chips’), such as the Central Processing Unit (CPU) and the Graphics Processing Unit (GPU). These circuits consist of billions of tightly packed transistors, whose density has doubled roughly every 18 months according to the famous Moore’s Law. In the 1980s and 1990s, this produced a sharp growth in computing performance simply because manufacturers kept producing chips with ever higher transistor counts. While this required corresponding increases in energy consumption, computers ran on the equivalent of up to a few 100-watt light bulbs, powering CPUs with a ‘power density’ (the amount of heat generated per cm²) similar to that of a hot plate. Most of this energy ended up as heat, which was removed by fans or fluid-based cooling systems.

Managing heat dissipation became more onerous in the mid-noughties, when CPUs reached a power density similar to that of a nuclear reactor and overheating became a common problem for the fastest chips. It was estimated that, if left to grow at the same rate, the heat generated by a CPU would reach the same thermal range as rocket exhausts or the surface of the sun! The laws of physics made it impossible to dissipate that amount of heat in all but the most specialised physical environments, and this drove some significant changes and innovations in CPU design.

One of the more prominent targets for energy efficiency is for a processor to draw only as much power as it needs. In typical settings, one simply does not require all the raw processing power that is available, e.g. when checking e-mail or browsing web pages. Modern processors therefore include power management features that adjust their voltage and/or clock speed to balance power draw against performance. While this lets a processor give up performance to save power, it does not address the challenge of getting more performance out of processors without the heat dissipation issues described above.
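Adjusting voltage and clock speed saves so much power because of a well-known relationship: the dynamic (switching) power of CMOS logic scales roughly with the square of the supply voltage times the clock frequency. The short Python sketch below illustrates this. It is not taken from any real processor. The activity factor, capacitance, voltage and frequency values are hypothetical, and leakage (static) power is ignored.

```python
# Minimal sketch of the textbook CMOS dynamic-power model behind voltage/frequency
# scaling: P = activity * C * V^2 * f. All numbers are hypothetical, chosen only
# for illustration; leakage (static) power is ignored.

def dynamic_power(activity: float, capacitance: float, voltage: float, frequency: float) -> float:
    """Switching power in watts: activity factor x capacitance (F) x voltage^2 (V^2) x clock (Hz)."""
    return activity * capacitance * voltage ** 2 * frequency


def relative_power(voltage_scale: float, frequency_scale: float) -> float:
    """Dynamic power relative to the nominal point when V and f are scaled by these factors."""
    return voltage_scale ** 2 * frequency_scale


# Hypothetical nominal operating point: 1.2 V at 3 GHz.
nominal_watts = dynamic_power(activity=0.2, capacitance=1e-9, voltage=1.2, frequency=3.0e9)

# Hypothetical power-saving step: lower both voltage and clock speed by 20%.
saving_step = relative_power(voltage_scale=0.8, frequency_scale=0.8)

print(f"nominal switching power: {nominal_watts:.3f} W")
print(f"after a 20% voltage and clock reduction: {saving_step:.3f} x nominal power")
```

In this model, cutting voltage and clock speed by 20% each leaves about 51% of the dynamic power for 80% of the clock speed. That is why running below peak speed saves disproportionately more energy than it costs in speed.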

Engineers turned to ‘multi-core’ CPUs, which had been invented in the 1980s but had until then remained largely within the realm of corporate mainframes, to explore their potential for consumer applications. In essence, a multi-core CPU contains two or more nearly identical processors, or ‘cores’, on the same chip. Crucially, doubling the number of cores (e.g. moving from a single-core to a dual-core chip) can deliver 1.8 times the performance while consuming the same power as the equivalent single-core chip, even though each core in the dual-core chip runs slightly slower. This not only allows gains in performance with minimal extra energy consumption. Multiple cores are also particularly suited to multi-tasking (different workloads can be handled simultaneously by separate cores) and power management (cores can be switched off when not needed). This heralded a new era in processor design for consumer technologies: most processors found today in smartphones, laptops and workstations contain multiple cores with advanced power management features.
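Why can two slower cores beat one fast core at the same power? A back-of-envelope Python sketch makes the trade-off concrete, under three loudly stated assumptions that are not from the article:

- Each core's power scales with the cube of its clock speed, because voltage is taken to scale roughly with frequency.
- Single-core performance grows with clock speed to a hypothetical exponent `beta`. A value of `beta` below 1 represents time spent waiting on memory.
- The workload splits perfectly across cores.

```python
# Back-of-envelope sketch (assumptions, not vendor data):
#  - dynamic power per core ~ V^2 * f, and voltage is assumed to scale roughly
#    linearly with f, so power per core ~ f^3;
#  - single-core performance grows as f^beta; beta < 1 models time spent waiting
#    on memory (beta is a hypothetical parameter);
#  - the workload splits perfectly across cores.

def cores_at_equal_power(beta: float, cores: int = 2) -> tuple[float, float]:
    """Return (clock scale per core, relative performance) for `cores` cores that
    together draw the same dynamic power as one core at full clock speed."""
    clock_scale = cores ** (-1.0 / 3.0)          # solves cores * s^3 == 1
    performance = cores * clock_scale ** beta    # perfect parallel speed-up assumed
    return clock_scale, performance


for beta in (1.0, 0.6):
    s, perf = cores_at_equal_power(beta)
    print(f"beta={beta}: each core at {s:.2f}x clock, {perf:.2f}x single-core performance")
```

With `beta = 1`, the two cores each run at about 0.79x the clock and together give about 1.59x the performance. With `beta = 0.6`, the result rises to about 1.74x. That is in the same range as the 1.8x figure quoted above, though the real number depends on the chip and the workload.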

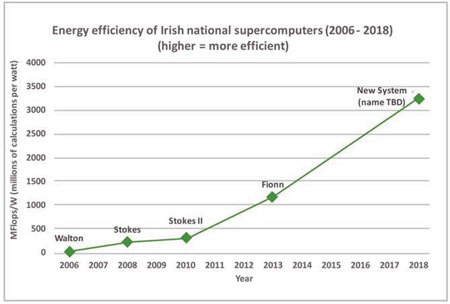

Not surprisingly, supercomputers have also benefitted from the energy efficiency of modern multi-core CPUs. From September 2018, Irish researchers have access to a newly installed supercomputer, the largest national system for scientific research, managed by the Irish Centre for High-End Computing. The new machine, to be named later in the year, is five times more powerful than its predecessor (called ‘Fionn’) but will use only up to 1.5 times as much electricity. One can see the progressive gains in efficiency of all the Irish national supercomputers managed by ICHEC over 2006-2018 (see Figure): over 100 times more computational work can now be done per unit of energy than 12 years ago.
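The efficiency figures in this paragraph can be checked with simple arithmetic. The Python sketch below assumes that "five times more powerful" means 5x the performance of Fionn and that the electricity figure means at most 1.5x Fionn's power draw. Both readings are interpretations, not published specifications.

```python
import math

# Assumed reading of the figures in the text: "five times more powerful" as 5x
# the performance of Fionn, and "up to 1.5 times" the electricity as at most
# 1.5x its power draw.
performance_ratio = 5.0
power_ratio = 1.5

# Work done per unit of energy scales with performance / power.
efficiency_gain = performance_ratio / power_ratio
print(f"new system vs Fionn: at least {efficiency_gain:.1f}x more work per unit of energy")

# ICHEC systems 2006-2018: over 100x more work per unit of energy in 12 years.
total_gain, years = 100.0, 12
annual_factor = total_gain ** (1 / years)          # compound average gain per year
doubling_years = math.log(2) / math.log(annual_factor)
print(f"that is at least {annual_factor:.2f}x per year, "
      f"doubling about every {doubling_years:.1f} years")
```

On these assumptions, the new machine does at least about 3.3 times as much work per unit of energy as Fionn. A 100-fold gain over 12 years corresponds to efficiency improving by roughly 1.47x per year, or doubling about every 1.8 years.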


For the last decade or so, the HPC community has been making use of even more energy-efficient ‘many-core’ technologies, in which CPUs are coupled with ‘accelerator’ cards containing hundreds or thousands of small processing cores to carry out incredible feats of number crunching. ICHEC has been widely recognised for its contributions to many-core technology in partnership with major companies in the sector such as Intel, Nvidia and Xilinx. Our work mainly focuses on how software applications can make the best use of such hardware. As with other technologies, advances made in the supercomputing domain tend to trickle down and be adapted for consumer applications further down the line.

Despite the huge progress made, the world’s largest supercomputers still need a lot of power. The top system, called ‘Summit’ and hosted at the US Oak Ridge National Laboratory, requires about 15 megawatts of electricity (enough juice for all residential homes in Galway city) to run its approximately 2.3 million compute cores. Together, these cores are capable of 122 petaflops, where one petaflop equals one quadrillion calculations per second, a measure of raw compute performance.

Meanwhile, there are huge efforts globally to achieve exascale computing by the turn of the next decade. The goal is a system capable of one exaflop (one quintillion calculations per second), roughly eight times as powerful as Summit. One of the biggest challenges is that such a system only makes economic sense if its power consumption is 20-30 megawatts. Meeting that budget requires roughly four to six times Summit’s energy efficiency.
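The gap between Summit and an exascale target can be expressed as performance per watt. The Python sketch below uses only the figures quoted in this article: 122 petaflops, about 15 MW, one exaflop, and a 20-30 MW budget. Other published power figures for Summit would shift the results somewhat.

```python
# Energy-efficiency gap between Summit and an exascale target, using only the
# figures quoted in the article (other published power figures would differ).
PETA, EXA = 1e15, 1e18
MEGAWATT = 1e6

summit_flops = 122 * PETA          # 122 petaflops
summit_power = 15 * MEGAWATT       # about 15 MW

summit_gflops_per_watt = summit_flops / summit_power / 1e9
print(f"Summit: {summit_gflops_per_watt:.1f} GFLOPS per watt")

exa_flops = 1 * EXA
print(f"one exaflop is {exa_flops / summit_flops:.1f}x Summit's performance")

required = {}  # power budget in MW -> efficiency needed relative to Summit
for budget_mw in (20, 30):
    target = exa_flops / (budget_mw * MEGAWATT) / 1e9
    required[budget_mw] = target / summit_gflops_per_watt
    print(f"{budget_mw} MW budget: needs {target:.1f} GFLOPS/W, "
          f"{required[budget_mw]:.1f}x Summit's efficiency")
```

On these figures, Summit delivers about 8.1 GFLOPS per watt. An exaflop machine would need about 50 GFLOPS per watt within 20 MW, or about 33 GFLOPS per watt within 30 MW. That is roughly 6.1x and 4.1x Summit's efficiency, respectively.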

There are serious and ambitious plans in Europe to develop world-class exascale systems based on European technologies by 2023, driven by the EuroHPC Joint Undertaking set up by the European Commission. This represents a huge opportunity for Ireland to join a pan-European effort, contributing its expertise and resources to the advancement of ground-breaking exascale technologies and benefiting from access to these powerful systems in future. With regard to the energy efficiency of computers, one can always look to biology for inspiration. It has been postulated that the human brain operates at about one exaflop, yet it requires only about 20 W of power.

For more information:

Jean-Christophe Desplat

Director, Irish Centre for High-End Computing

T: 01 524 1608

E: j-c.desplat@ichec.ie